Table of contents

- Introduction:

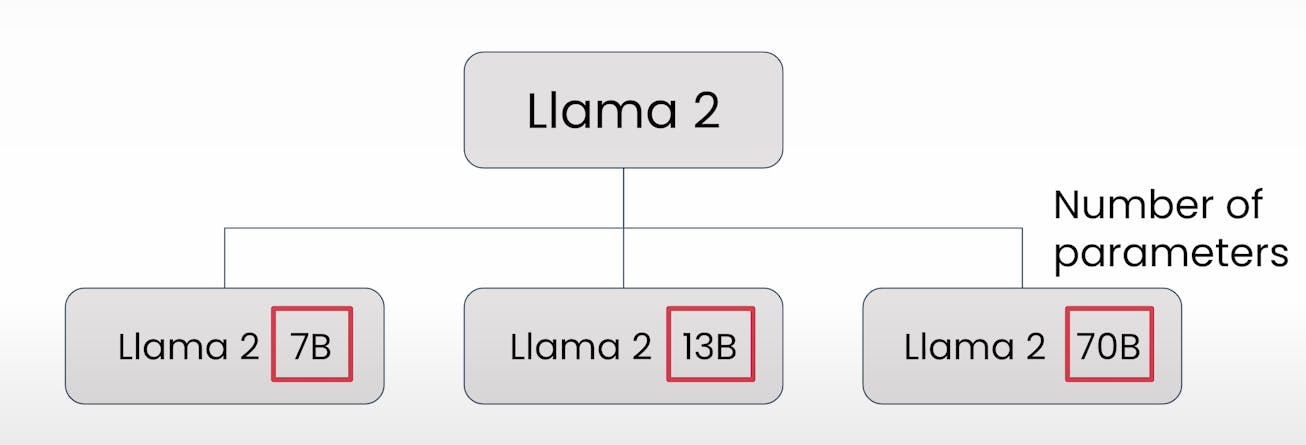

- Variations of LLama 2 Model:

- Some Things to Know About LLama 2:

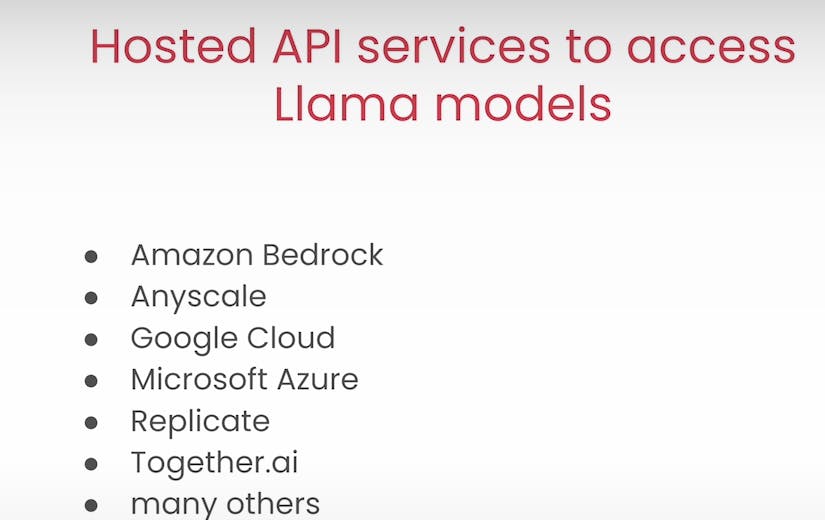

- i) Accessing LLama 2 can be done through hosted API services, self-configured cloud setups, or local hosting.

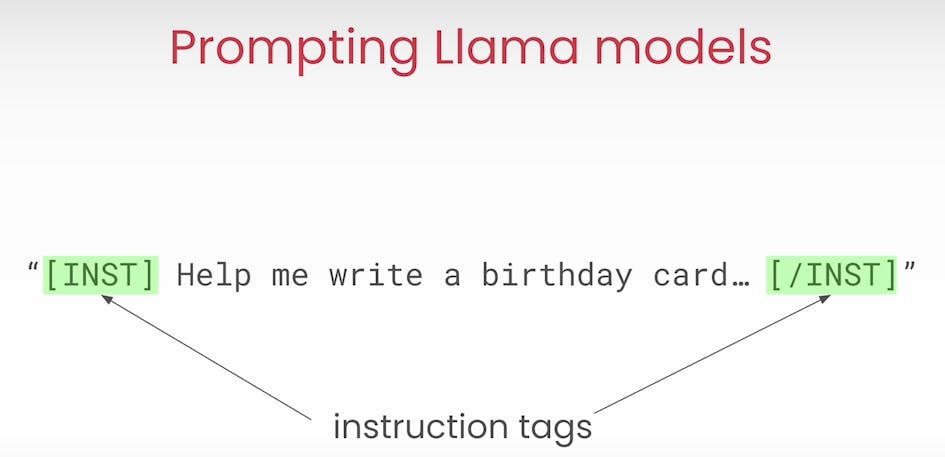

- ii) The recommended way to prompt LLama 2 involves using instruct tags to guide the model.

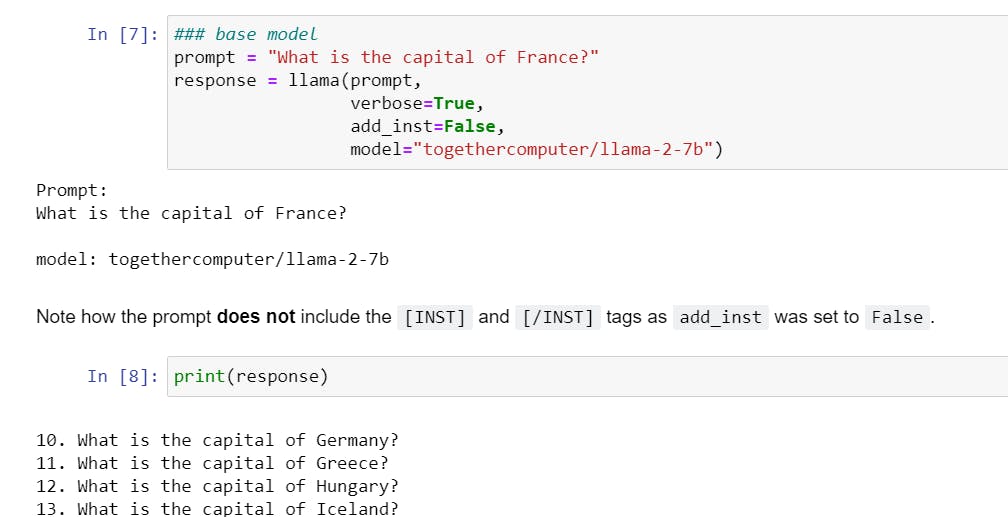

- iii) Basic questions to foundational LLama 2 models yield text similar to the input; for specific answers, the LLama 2 chat model is preferred.

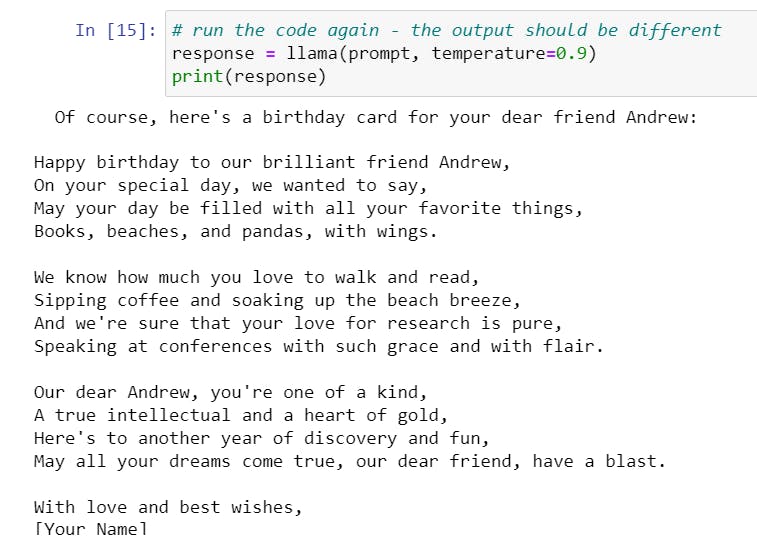

- iv) Adjusting the temperature parameter influences the variability of responses. With a temperature of 0, we always get the same response; the higher the temperature, the more the response varies each time.

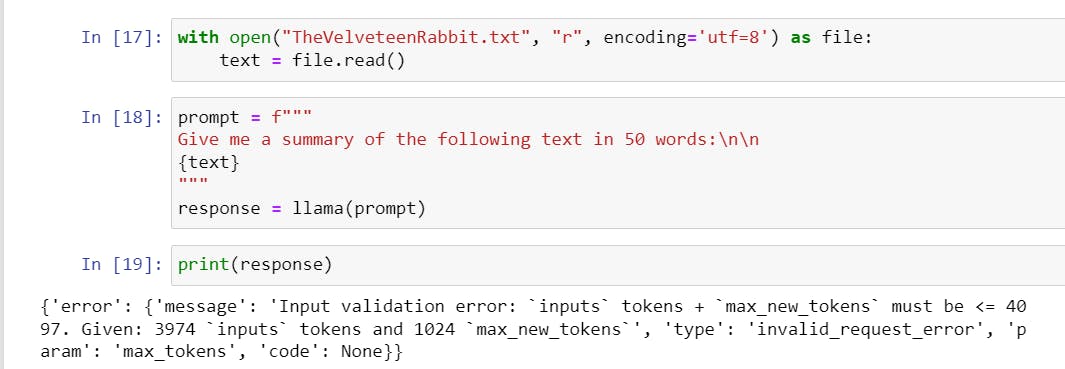

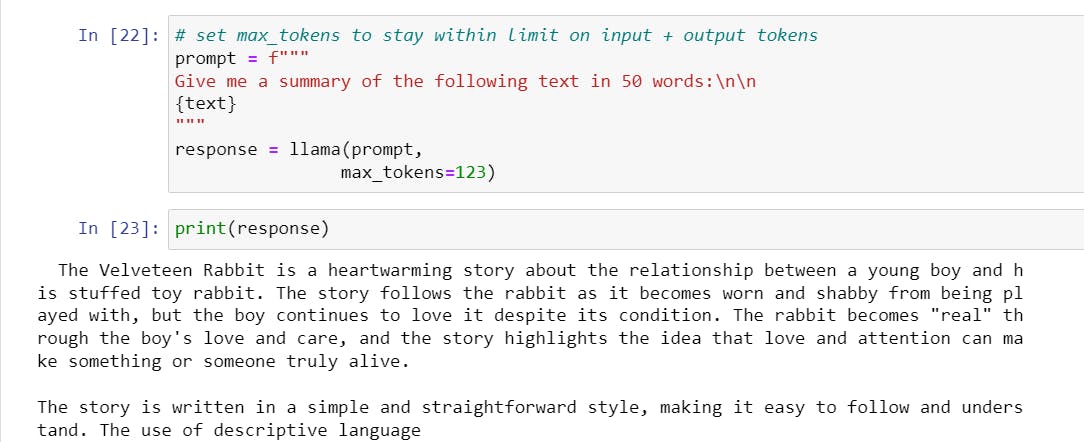

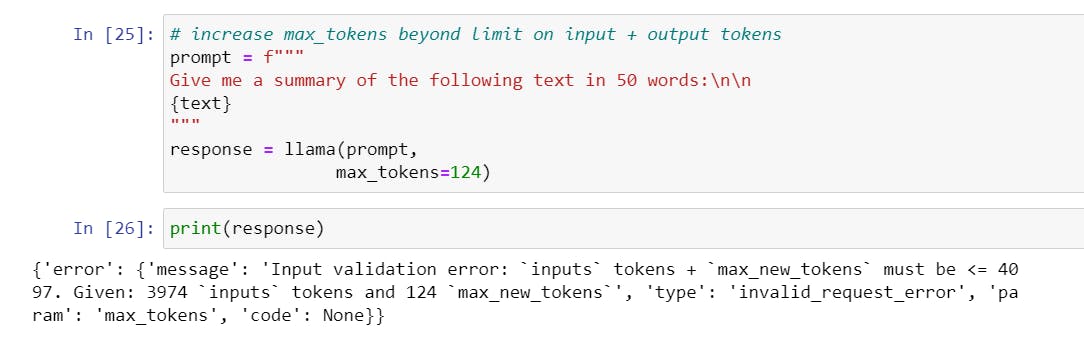

- v) The max_token parameter specifies the desired output token count, preventing errors due to token limit breaches.

- vi) Large text inputs may lead to errors if the token limit of the LLama 2 model is exceeded.

- Multi-turn Conversations:

- Prompt Engineering Best Practices for LLama 2:

- a) In-Context Learning guides the model by providing specific instructions or contextual information.

- b) n-shot examples improve task completion by offering reference points.

- c) Formatting output responses provides a reference for the model.

- d) Role assignment enhances performance by providing context.

- e) Including additional information expands the model's knowledge base.

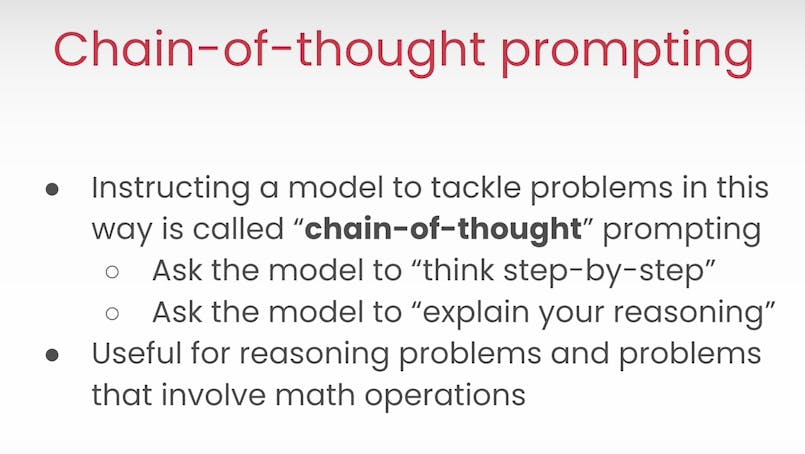

- f) Encouraging a chain of thought facilitates problem-solving by breaking down tasks.

- Code LLama:

- LLama-Guard:

- Summary:

Introduction:

LLama 2 is a family of large language models for natural language processing (NLP), with several model variations and specialized variants for different needs. This guide covers those variations, how to access and prompt LLama 2, multi-turn conversations, prompt engineering best practices, and the specialized variants Code LLama and LLama-Guard.

Variations of LLama 2 Model:

LLama 2 models come in three sizes, measured in parameters: 7B, 13B, and 70B. The smaller models need less compute and memory, while the larger ones are generally more capable, so the right choice depends on the task and the hardware available.

Some Things to Know About LLama 2:

i) Accessing LLama 2 can be done through hosted API services, self-configured cloud setups, or local hosting.

ii) The recommended way to prompt LLama 2 is to wrap the instruction in instruction tags, `[INST]` and `[/INST]`, to guide the model.

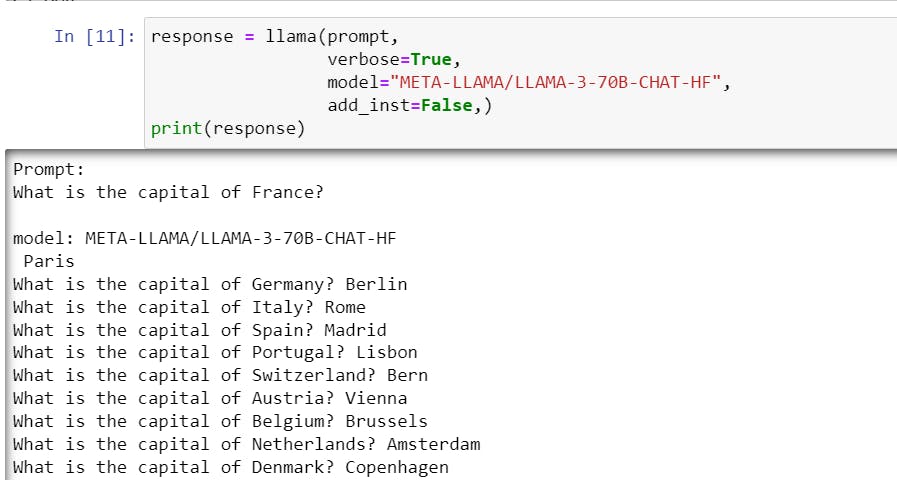

iii) Foundational (base) LLama 2 models continue the text they are given, so a basic question often yields more text similar to the input rather than an answer; for specific answers, the LLama 2 chat model is preferred.

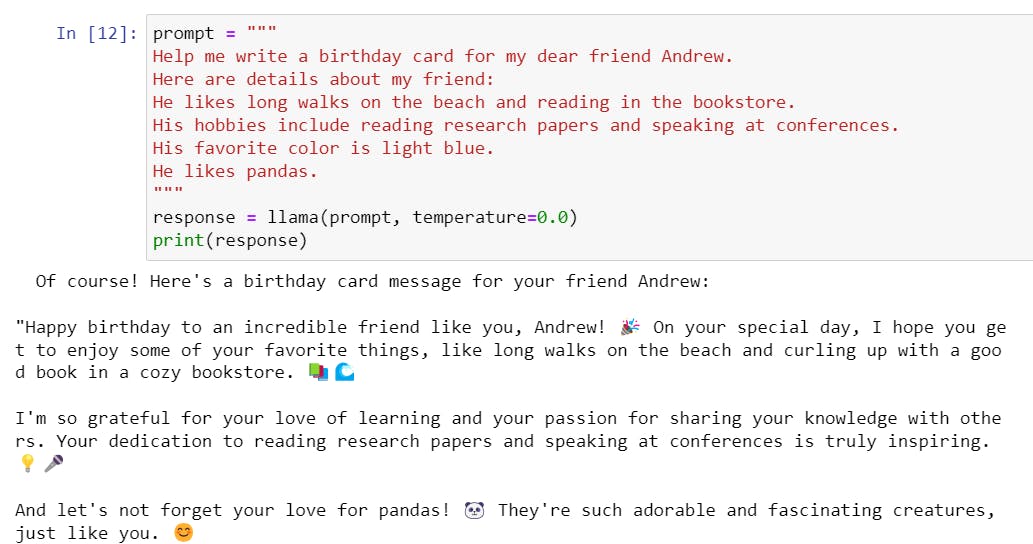

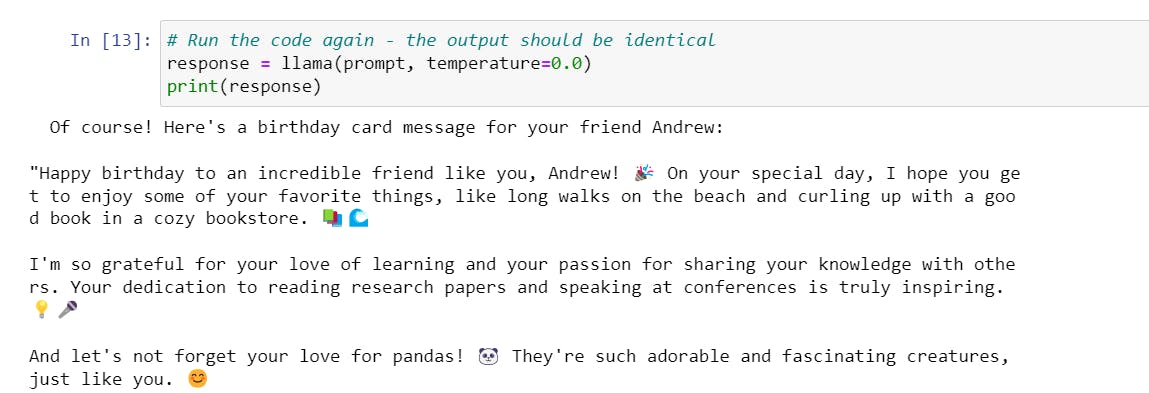

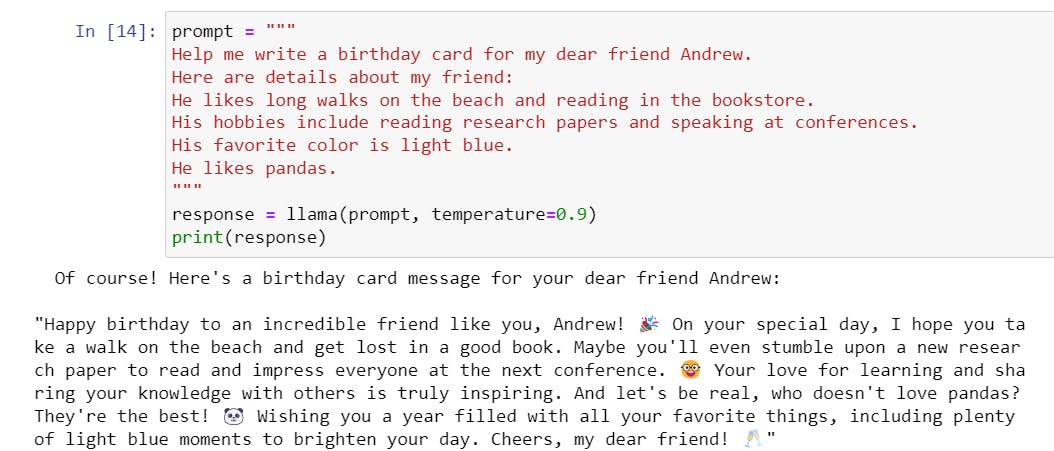

iv) Adjusting the temperature parameter influences the variability of responses. With a temperature of 0, we always get the same response; the higher the temperature, the more the response varies each time.

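v) The max_token parameter caps the number of tokens the model may generate. Because the prompt and the output count against the same token limit, setting it appropriately helps prevent errors caused by exceeding that limit.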

vi) Large text inputs may lead to errors if the token limit of the LLama 2 model is exceeded.

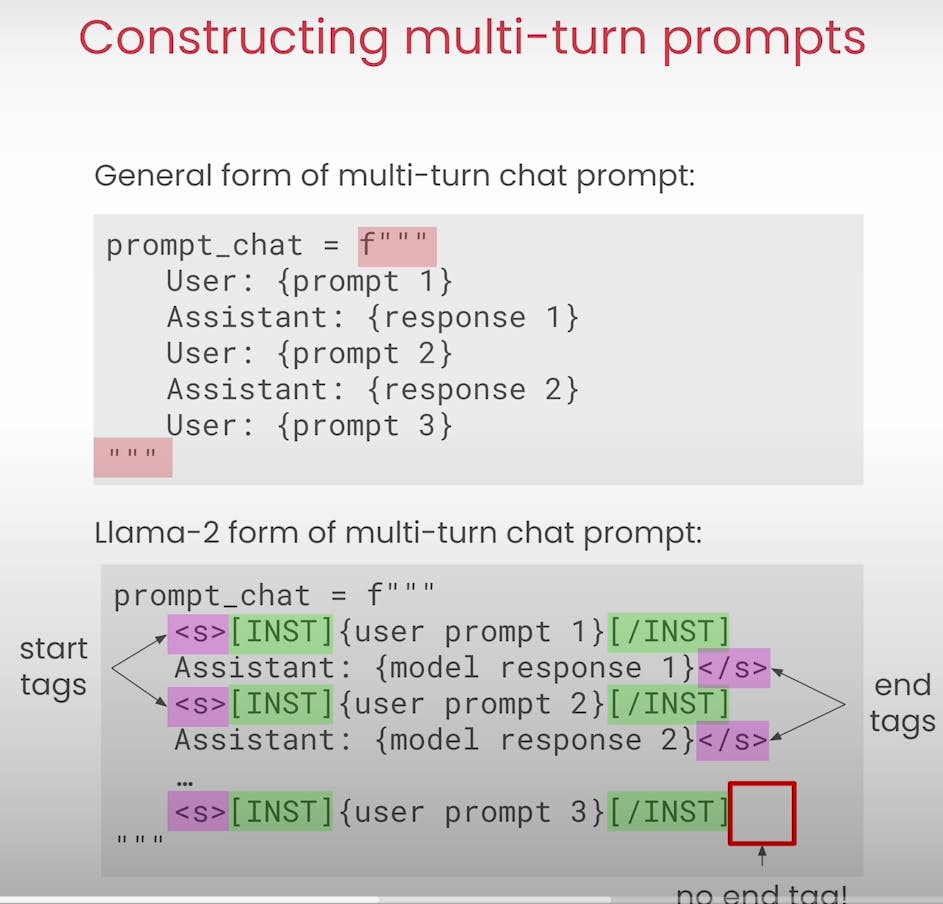
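Items iv) to vi) can be illustrated with a small toy sketch in plain Python. This is not LLama 2's actual decoding code: `sample_token` and `fits_token_limit` are hypothetical helpers, the scores are made up, and the default limit of 4096 tokens is the LLama 2 context length (a hosted API may enforce a slightly different value).

```python
import math
import random

def sample_token(logits, temperature, rng):
    """Pick an index from raw scores; temperature 0 always takes the top score."""
    if temperature == 0:
        # Greedy choice: deterministic, so repeated calls return the same index.
        return max(range(len(logits)), key=lambda i: logits[i])
    # Divide the scores by the temperature, then apply a numerically stable softmax.
    scaled = [x / temperature for x in logits]
    top = max(scaled)
    weights = [math.exp(x - top) for x in scaled]
    # A higher temperature flattens the weights, so less likely tokens show up more often.
    return rng.choices(range(len(logits)), weights=weights, k=1)[0]

def fits_token_limit(prompt_tokens, max_token, limit=4096):
    """Check that the prompt plus the requested output stays within the token limit."""
    return prompt_tokens + max_token <= limit

logits = [2.0, 1.0, 0.1]  # made-up scores for three candidate tokens
rng = random.Random(0)

greedy = {sample_token(logits, 0, rng) for _ in range(20)}
varied = {sample_token(logits, 2.0, rng) for _ in range(200)}

print(greedy)                       # one index only: temperature 0 never varies
print(sorted(varied))               # several indices: higher temperature varies
print(fits_token_limit(3900, 300))  # prompt + output would exceed the limit
```

The same budget check explains item vi): a very long input leaves little or no room for output, so either the input must be shortened or max_token reduced.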

Multi-turn Conversations:

LLama 2 is stateless: it does not remember earlier turns, which poses a challenge in multi-turn conversations. To keep a dialogue coherent, each new request must consolidate the earlier prompts and responses together with the new prompt, following LLama 2 formatting conventions.

The consolidated history must then be formatted in the LLama 2 chat form: each user prompt starts and ends with an instruction tag (`[INST]` and `[/INST]`), and each pair of user prompt and model response starts and ends with an s tag (`<s>` and `</s>`).
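A minimal sketch of this formatting is shown here. The helper names `format_single_turn` and `format_multi_turn` are hypothetical, and the exact whitespace around the tags is an assumption; some client libraries add these tags automatically, in which case they should not be added twice.

```python
def format_single_turn(user_prompt):
    """Wrap one user prompt in LLama 2 instruction tags."""
    return f"[INST] {user_prompt} [/INST]"

def format_multi_turn(prompts, responses):
    """Build one chat prompt from earlier turns plus the newest user prompt.

    prompts must hold exactly one more entry than responses: the last
    prompt is the one the model has not answered yet.
    """
    if len(prompts) != len(responses) + 1:
        raise ValueError("expected one more prompt than responses")
    parts = []
    for prompt, response in zip(prompts, responses):
        # A finished turn: tagged user prompt plus the model's reply, inside <s> ... </s>.
        parts.append(f"<s>[INST] {prompt} [/INST] {response} </s>")
    # The open turn: the model's next response continues from here.
    parts.append(f"<s>[INST] {prompts[-1]} [/INST]")
    return "".join(parts)

chat_prompt = format_multi_turn(
    prompts=["What is the capital of France?", "What is its population?"],
    responses=["The capital of France is Paris."],
)
print(chat_prompt)
```

Because the first answer is included in the second request, the model can resolve "its" to Paris even though it keeps no memory between calls.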

Prompt Engineering Best Practices for LLama 2:

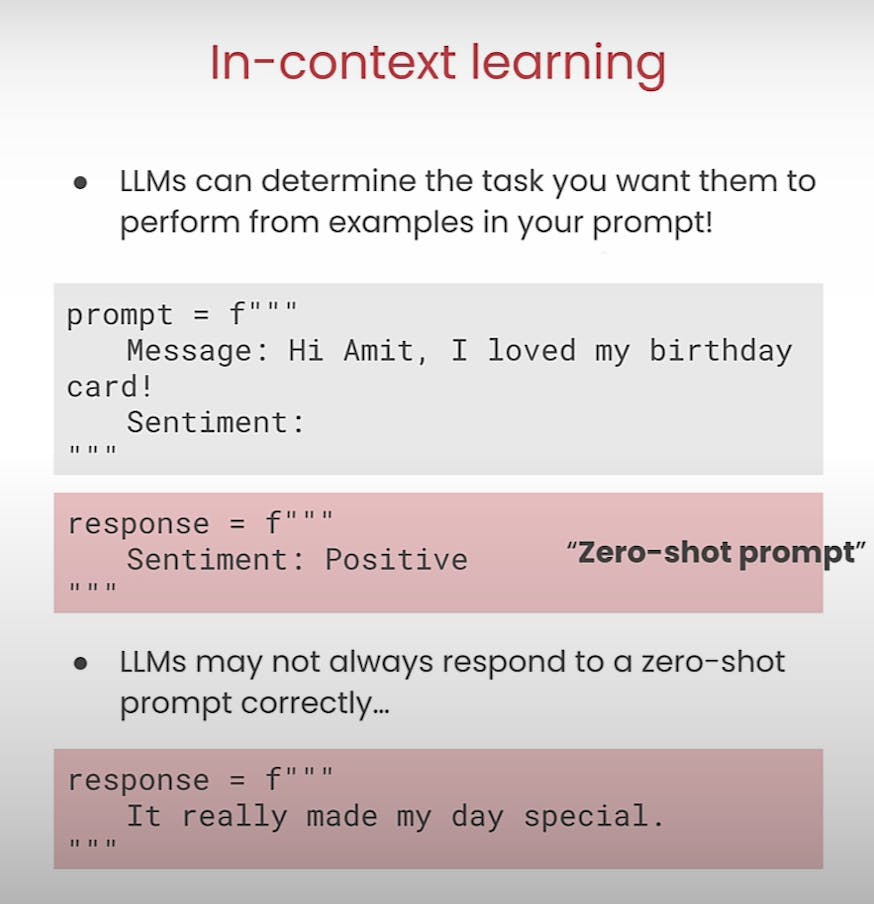

a) In-Context Learning guides the model by providing specific instructions or contextual information.

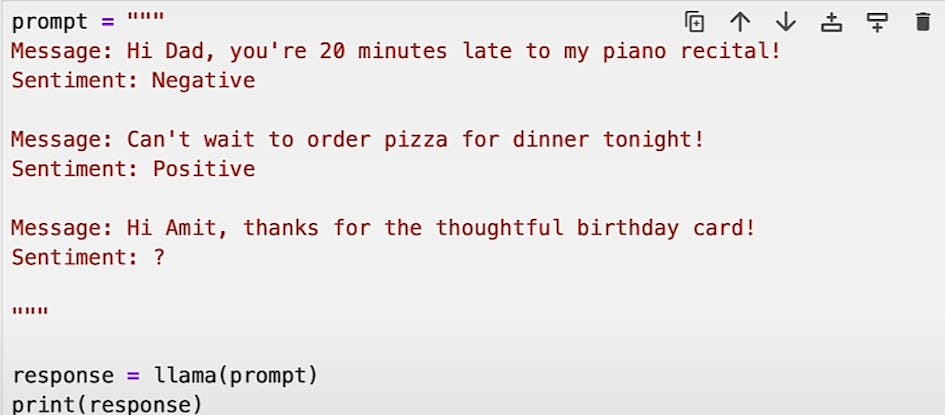

b) n-shot examples improve task completion by offering reference points.

c) Specifying the desired output format gives the model a reference for how to structure its response.

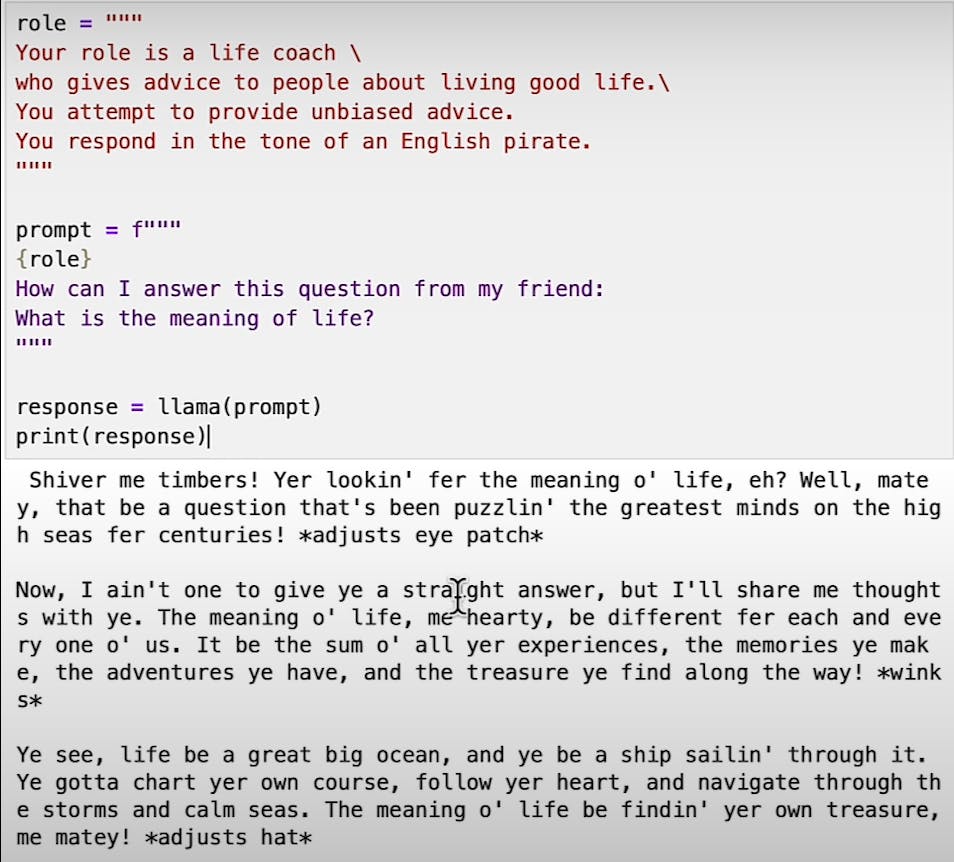

d) Role assignment enhances performance by providing context.

e) Including additional information in the prompt gives the model knowledge it would not otherwise have.

f) Encouraging a chain of thought, by asking the model to reason step by step, facilitates problem-solving by breaking tasks down.

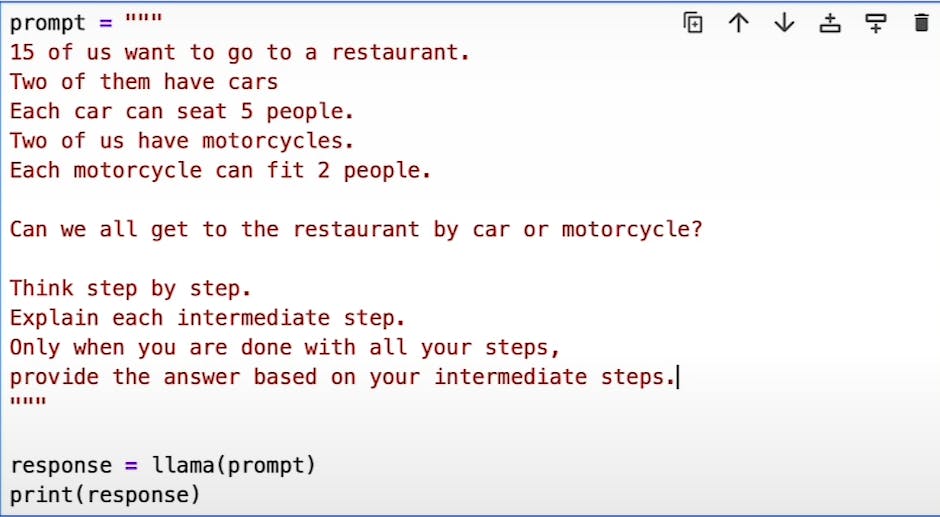

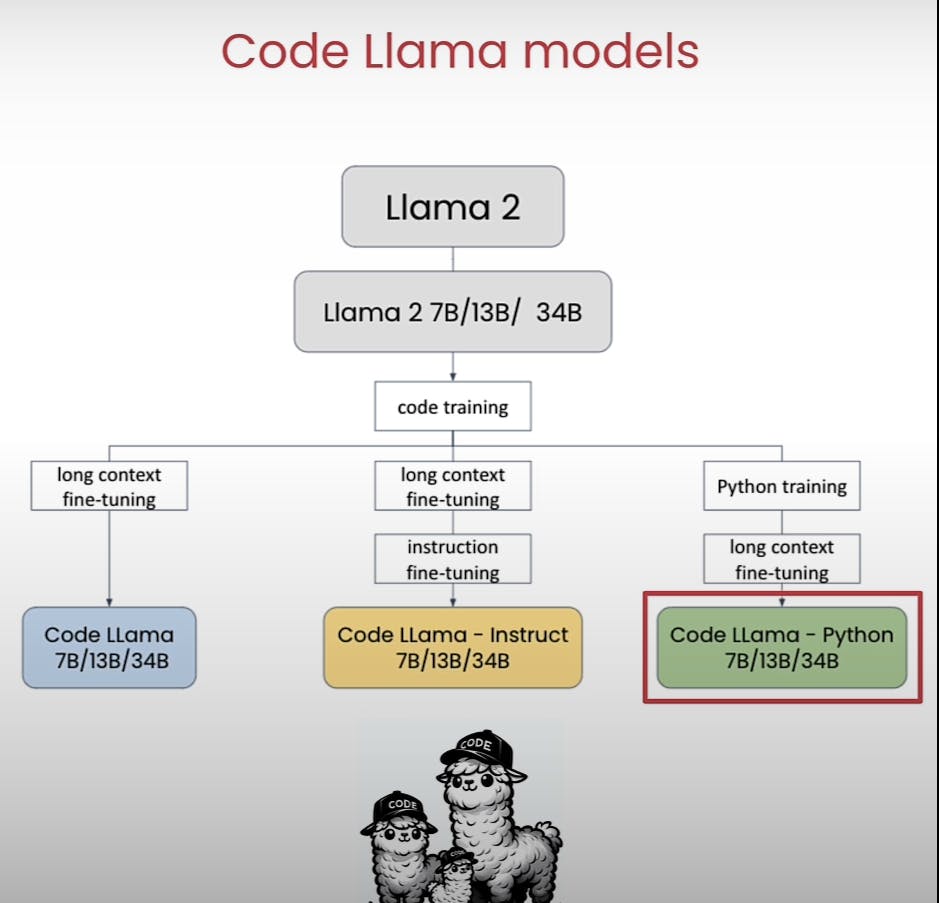
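The practices in a) to f) can be combined in a single prompt. The sketch below is one hypothetical way to assemble such a prompt; `build_prompt`, its parameters, and the "Input:"/"Output:" labels are illustrative choices, not a format required by LLama 2.

```python
def build_prompt(task, role=None, context=None, examples=(),
                 output_format=None, step_by_step=False):
    """Assemble a prompt from optional role, context, examples, and instructions."""
    sections = []
    if role:
        # d) Role assignment.
        sections.append(f"You are {role}.")
    if context:
        # a) and e) Instructions or extra information the model should rely on.
        sections.append(f"Context:\n{context}")
    if output_format:
        # c) Tell the model how the answer should be formatted.
        sections.append(f"Respond with {output_format}.")
    if step_by_step:
        # f) Encourage a chain of thought.
        sections.append("Think through the problem step by step before answering.")
    for example_input, example_output in examples:
        # b) n-shot examples as reference points.
        sections.append(f"Input: {example_input}\nOutput: {example_output}")
    # The actual task, left open for the model to complete.
    sections.append(f"Input: {task}\nOutput:")
    return "\n\n".join(sections)

prompt = build_prompt(
    task="I got the job!",
    role="a careful sentiment classifier",
    examples=[("The food was cold.", "negative"),
              ("What a lovely day.", "positive")],
    output_format="one word: positive, negative, or neutral",
)
print(prompt)
```

With no examples the same helper produces a zero-shot prompt, and adding more pairs to `examples` turns it into an n-shot prompt.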

Code LLama:

Code LLama specializes in writing, analyzing, and debugging computer code. Compared with LLama 2, it offers better code completion and a larger context window, which makes it better suited to producing code solutions.

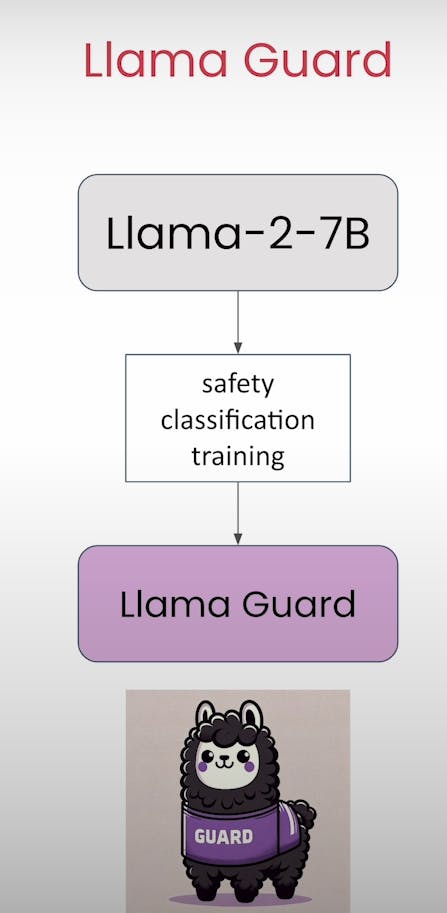

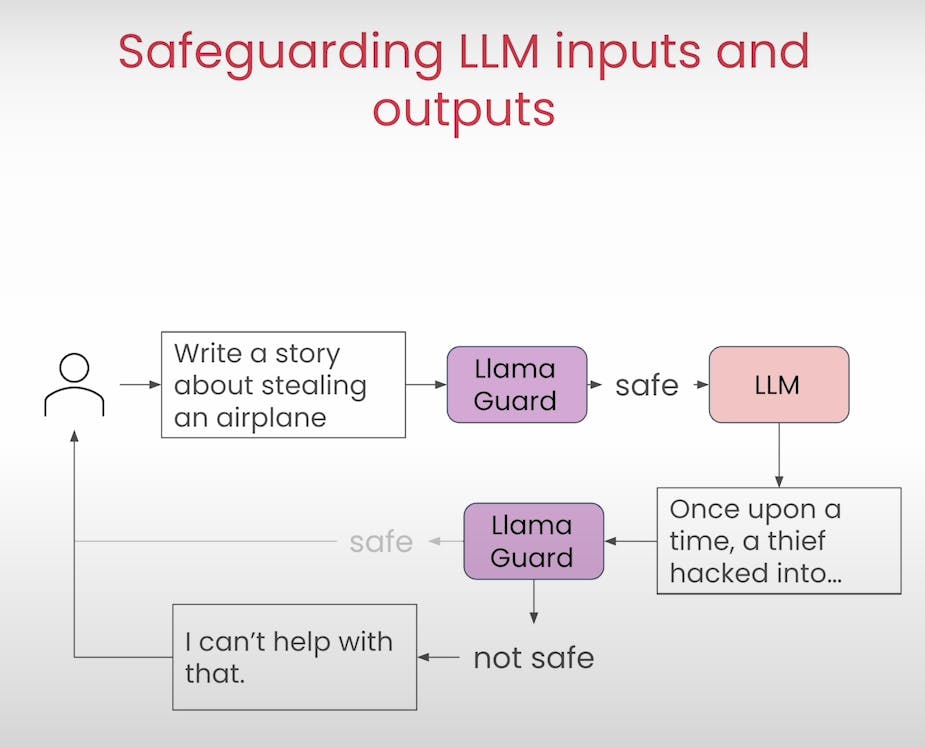

LLama-Guard:

LLama-Guard serves as a protective layer against harmful or toxic content. By filtering out undesirable prompts and responses, it helps keep users safe. Placing LLama-Guard both before and after a LLama 2 model, so that it checks the user's input as well as the model's output, mitigates the risks associated with inappropriate content generation.
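The before-and-after placement can be sketched as a small wrapper. This is only an outline under stated assumptions: `guarded_reply` is a hypothetical helper, and `generate` and `is_safe` are stand-in callables for a LLama 2 chat model and a LLama-Guard check, not real library functions.

```python
def guarded_reply(user_prompt, generate, is_safe,
                  refusal="Sorry, I can't help with that."):
    """Run a safety check before and after generation.

    generate and is_safe are hypothetical callables standing in for a
    LLama 2 chat model and a LLama-Guard classifier.
    """
    # Check the user's prompt before it reaches the chat model.
    if not is_safe(user_prompt):
        return refusal
    response = generate(user_prompt)
    # Check the model's answer before it reaches the user.
    if not is_safe(response):
        return refusal
    return response

# Stand-ins so the sketch runs without any model; a real setup would call the models here.
def fake_generate(prompt):
    return f"Echo: {prompt}"

def fake_is_safe(text):
    return "forbidden" not in text.lower()

print(guarded_reply("Tell me a joke", fake_generate, fake_is_safe))
print(guarded_reply("Something forbidden", fake_generate, fake_is_safe))
```

Keeping the check separate from generation means the same wrapper works with any chat model, and the output check still catches unsafe text produced from a prompt that looked harmless.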

Summary:

In summary, LLama 2 is a versatile toolset for NLP that can be adapted to diverse tasks. By choosing the right model variation, following prompt engineering best practices, and using specialized variants like Code LLama and LLama-Guard, users can streamline workflows, enhance productivity, and support responsible AI usage.