Dynamics of Deep Learning: A Journey through Forward and Backward Propagation

Introduction:

In deep learning, two essential processes govern how neural networks learn: forward propagation and backward propagation. Forward propagation turns an input into a prediction. Backward propagation works out how each weight and bias should change so that the next prediction is better. Let's take a closer look at each of these components and at how they work together.

I. Forward Propagation: Navigating the Neural Pathways

1. What is Forward Propagation in Deep Learning?

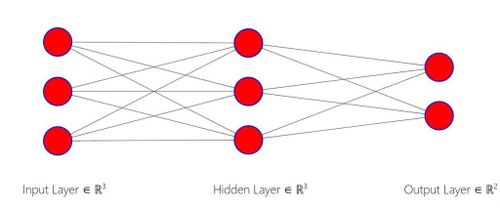

At its core, forward propagation is the phase of a neural network's operation in which input data passes through the network layer by layer. Each layer transforms the previous layer's output until the final layer produces a prediction. During training, that prediction is compared with the expected output to measure the error. The intermediate values computed along the way are kept, because back propagation reuses them.

2. Example

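Consider a simple neural network tasked with recognizing handwritten digits. The pixel values of the input image enter the first layer. Each subsequent layer combines the outputs of the previous one into progressively more abstract features: early layers might respond to strokes or edges, and later ones to combinations of strokes. The final layer has one output per digit class, 0 through 9. The class with the highest output is taken as the predicted digit.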
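The last step of the digit example can be sketched in a few lines of Python. This sketch assumes the final layer's ten raw scores are turned into probabilities with softmax. Softmax is a common choice for classifiers, but the example does not specify it. The scores themselves are made up for illustration.

```python
import math

def softmax(scores):
    """Turn raw output scores into probabilities that sum to 1."""
    m = max(scores)                        # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical raw outputs of a 10-unit final layer (one unit per digit 0-9).
scores = [0.1, 2.3, -1.0, 0.5, 0.0, 4.2, 1.1, -0.3, 0.7, 0.2]
probs = softmax(scores)

# The predicted digit is the index of the largest probability.
predicted_digit = max(range(10), key=lambda d: probs[d])
print(predicted_digit, round(probs[predicted_digit], 3))
```

Softmax preserves the ordering of the scores. Taking the largest probability therefore picks the same digit as taking the largest raw score. The probabilities matter mainly for computing the loss during training.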

3. Mathematical Formulas for Forward Propagation

The mathematical underpinnings involve matrix multiplication, bias addition, and activation functions. Each layer multiplies its input by a weight matrix, adds a bias vector, and applies an activation function element-wise:

\[ \text{Output} = \text{Activation}(\text{Weight} \times \text{Input} + \text{Bias}) \]
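Below is a minimal pure-Python sketch of this formula, applied one layer after another. Several details are hypothetical, chosen only so the arithmetic is easy to follow:

- the 2-3-1 layer sizes,
- the hand-picked weights and biases,
- ReLU for the hidden layer and sigmoid for the output layer.

A real implementation would typically use a matrix library such as NumPy instead of nested lists.

```python
import math

def sigmoid(z):
    """Logistic sigmoid activation: squashes z into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def relu(z):
    """Rectified linear unit: passes positive values, zeroes out negatives."""
    return max(0.0, z)

def dense_forward(weights, inputs, biases, activation):
    """One layer: Output = Activation(Weight x Input + Bias).

    weights: list of rows, one row per output neuron
    inputs:  list of input values from the previous layer
    biases:  one bias per output neuron
    """
    outputs = []
    for row, b in zip(weights, biases):
        z = sum(w * x for w, x in zip(row, inputs)) + b  # weighted sum plus bias
        outputs.append(activation(z))                     # nonlinearity
    return outputs

def forward(x, layers):
    """Pass x through each (weights, biases, activation) layer in order."""
    a = x
    for weights, biases, activation in layers:
        a = dense_forward(weights, a, biases, activation)
    return a

# Hypothetical 2-3-1 network with hand-picked parameters.
layers = [
    ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, -0.1], relu),
    ([[0.7, -0.5, 0.2]], [0.05], sigmoid),
]
print(forward([1.0, 2.0], layers))
```

For the input `[1.0, 2.0]`, the hidden layer produces approximately `[0.1, 1.0, 1.2]`. The output neuron then computes the sigmoid of 0.07 - 0.5 + 0.24 + 0.05 = -0.14.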

II. Backward Propagation: Unraveling the Learning Mechanism

1. What Is Back Propagation?

a. Definition:

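Back propagation is the process of computing how much each weight and bias of a neural network contributed to the error measured after forward propagation. Formally, it computes the gradient of the loss with respect to every parameter. Those gradients are then used to adjust the weights and biases so that the error decreases.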

b. The Chain Rule of Differentiation:

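The chain rule plays a pivotal role in back propagation. A network's loss depends on each weight only indirectly, through a chain of intermediate values such as weighted sums and activations. The chain rule states that the derivative of such a composition is the product of the derivatives of its individual links. Applying it layer by layer, starting from the output, gives the effect of every weight on the overall error.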
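As a sketch of the rule in symbols, assume a single neuron with the following quantities, all introduced here for illustration:

- input \( x \),
- weight \( w \) and bias \( b \),
- activation function \( f \),
- loss \( L \).

The loss depends on \( w \) only through the weighted sum \( z \) and the activation \( a \). Its derivative is therefore a product of three local derivatives:

\[
z = w x + b, \qquad a = f(z), \qquad
\frac{\partial L}{\partial w}
= \frac{\partial L}{\partial a} \cdot \frac{\partial a}{\partial z} \cdot \frac{\partial z}{\partial w}
= \frac{\partial L}{\partial a} \cdot f'(z) \cdot x
\]

Now stack two single-neuron layers. The first is \( z^{(1)} = w^{(1)} x + b^{(1)} \), \( a^{(1)} = f(z^{(1)}) \). The second is \( z^{(2)} = w^{(2)} a^{(1)} + b^{(2)} \), \( a^{(2)} = f(z^{(2)}) \). The chain for the first layer's weight simply grows longer:

\[
\frac{\partial L}{\partial w^{(1)}}
= \frac{\partial L}{\partial a^{(2)}} \cdot f'\big(z^{(2)}\big) \cdot w^{(2)} \cdot f'\big(z^{(1)}\big) \cdot x
\]

The leading factors are shared by every weight below the output layer. This is why back propagation computes them once, starting at the output and moving backward.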

c. A Basic Example:

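Consider a single-layer network with one neuron. Back propagation computes the gradient of the loss with respect to its weight and bias. Those gradients are then used to update the parameters so that the difference between predicted and actual outputs shrinks.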
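Here is a minimal sketch of this single-neuron case. It assumes a sigmoid activation and a squared-error loss \( L = \tfrac{1}{2}(a - y)^2 \); the text specifies neither. The sketch proceeds in three steps:

1. Compute the gradient with the chain rule.
2. Check it against a numerical finite-difference estimate.
3. Use it for one weight update with a hypothetical learning rate of 0.5.

The starting values of `w`, `b`, `x`, and `y` are also made up.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def loss(w, b, x, y):
    """Squared-error loss 0.5 * (a - y)^2 for one sigmoid neuron."""
    a = sigmoid(w * x + b)
    return 0.5 * (a - y) ** 2

def backprop(w, b, x, y):
    """Gradients of the loss w.r.t. w and b, computed with the chain rule."""
    z = w * x + b              # forward: weighted sum
    a = sigmoid(z)             # forward: activation
    dL_da = a - y              # derivative of 0.5 * (a - y)^2 w.r.t. a
    da_dz = a * (1.0 - a)      # sigmoid'(z) = sigmoid(z) * (1 - sigmoid(z))
    delta = dL_da * da_dz      # dL/dz, shared by both parameters
    return delta * x, delta    # dz/dw = x, dz/db = 1

w, b, x, y = 0.8, -0.1, 1.5, 1.0   # hypothetical starting values and target
grad_w, grad_b = backprop(w, b, x, y)

# Sanity check: compare with a central finite-difference estimate.
eps = 1e-6
num_w = (loss(w + eps, b, x, y) - loss(w - eps, b, x, y)) / (2 * eps)
print(grad_w, num_w)

# One gradient-descent step (hypothetical learning rate of 0.5).
lr = 0.5
w_new, b_new = w - lr * grad_w, b - lr * grad_b
print(loss(w, b, x, y), "->", loss(w_new, b_new, x, y))
```

The finite-difference check is a cheap way to catch mistakes in a hand-derived gradient. It needs two extra forward passes per parameter, so it suits debugging, not training.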

2. How Is Back Propagation Done?

a. Forward Propagation with Activation Function:

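Back propagation starts with a forward pass: inputs flow through the layers, and each layer applies its activation function. The weighted sums and activations computed here are reused during the backward pass. There, the derivative of each layer's activation function determines how strongly the error signal is scaled as it passes back through that layer. This in turn determines how much each weight is adjusted.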
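To see how an activation's derivative scales the backward signal, here are the derivatives of three common activations: sigmoid, tanh, and ReLU. The text does not name a particular one, so these are illustrative choices. The sigmoid derivative never exceeds 0.25, so each sigmoid layer shrinks the error signal passing through it. ReLU passes the signal through unchanged wherever the unit is active and blocks it elsewhere.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def sigmoid_prime(z):
    """Derivative of sigmoid; its maximum is 0.25, reached at z = 0."""
    s = sigmoid(z)
    return s * (1.0 - s)

def tanh_prime(z):
    """Derivative of tanh; its maximum is 1.0, reached at z = 0."""
    return 1.0 - math.tanh(z) ** 2

def relu_prime(z):
    """Derivative of ReLU. ReLU is not differentiable at 0;
    using 0 there is a common convention (an assumption here)."""
    return 1.0 if z > 0 else 0.0

for z in (-4.0, 0.0, 4.0):
    print(z, sigmoid_prime(z), tanh_prime(z), relu_prime(z))
```

At z = ±4 the sigmoid and tanh derivatives are already small. A saturated unit therefore lets very little error signal through, which slows learning for the weights feeding it.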

b. Loss Calculation with the Help of Loss Function:

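The difference between predicted and actual outcomes is quantified using a loss function. A loss function is a single number that is small when the predictions are close to the targets. Common choices are mean squared error for regression and cross-entropy for classification.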
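Two widely used loss functions, mean squared error and binary cross-entropy, can be sketched in plain Python as follows. The function names are hypothetical. The clipping constant `eps` is an added assumption that keeps `log()` from ever receiving 0.

```python
import math

def mse(predictions, targets):
    """Mean squared error: average of (prediction - target)^2."""
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)

def binary_cross_entropy(probs, labels, eps=1e-12):
    """Average of -[y*log(p) + (1-y)*log(1-p)] for labels y in {0, 1}.

    Each p is clipped into [eps, 1 - eps] so log() stays finite.
    """
    total = 0.0
    for p, y in zip(probs, labels):
        p = min(max(p, eps), 1.0 - eps)
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(labels)

print(mse([2.5, 0.0, 2.0], [3.0, -0.5, 2.0]))
print(binary_cross_entropy([0.9, 0.2], [1, 0]))
```

Cross-entropy penalizes confident wrong predictions much more heavily than mean squared error does. This is one reason it is usually preferred for classification.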

c. Weight Update with the Help of Optimizers:

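Optimizers use the gradients from back propagation to update the weights. The simplest optimizer, gradient descent, moves each weight a small step against its gradient: \( w \leftarrow w - \eta \, \frac{\partial L}{\partial w} \), where \( \eta \) is the learning rate. Each training iteration consists of a forward pass, a loss calculation, back propagation, and an update. Repeating this cycle over many iterations gradually improves the network's predictions.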
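The full cycle can be sketched on the smallest possible model: a single linear neuron \( \hat{y} = w x + b \) trained with mean squared error. Each iteration runs a forward pass, computes gradients, and applies a gradient-descent update. The following choices are all hypothetical, made for illustration:

- the data (points on \( y = 2x + 1 \)),
- the learning rate of 0.05,
- the 2000 iterations.

```python
def train_linear(xs, ys, lr=0.05, epochs=2000):
    """Fit y ~ w*x + b with batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Forward pass: prediction error for every example in the batch.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Backward pass: gradients of (1/n) * sum(error^2) w.r.t. w and b.
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        # Update: step each parameter against its gradient.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

xs = [0.0, 1.0, 2.0, 3.0]
ys = [2 * x + 1 for x in xs]   # hypothetical noiseless data from y = 2x + 1
w, b = train_linear(xs, ys)
print(w, b)
```

With this noiseless data, `w` and `b` approach 2 and 1. If the learning rate is too large, the updates overshoot the minimum and can diverge instead of converging.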

3. Why Is Back Propagation Needed?

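Back propagation is what makes training neural networks practical. A network can have millions of weights, and reducing the loss means adjusting all of them. One alternative is to estimate each gradient separately, for example by nudging one weight at a time and re-running the forward pass. That would require an extra forward pass for every weight. Back propagation instead computes the gradients for all weights in a single backward pass, at a cost within a small constant factor of the forward pass. This efficiency is what allows the network's parameters to be fitted to data and its predictions to improve.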

Conclusion:

In deep learning, forward and backward propagation work as a dynamic duo. Forward propagation passes inputs through the network's layers to produce a prediction. Backward propagation traces the resulting error back through those same layers to determine how each weight and bias should change, and an optimizer then applies those changes. Repeated over many examples, this cycle is what lets neural networks learn from data, adapt, and improve their predictions.